Matt Burr

Throughout 2025, I joined dozens of client calls and facilitated Guild’s Meeting of the Minds sessions — small-format, cross-industry gatherings where leaders grapple with the future of work. Across these conversations, I heard two very different currents.

On one side, there was noise. The mention of “AI” triggered the Cocktail Party Effect: instant attention, followed by a scramble to make sense of an expanding universe of tools and capabilities. Much of the conversation felt reactive, even anxious.

But alongside that noise were quieter truths about people. How employees actually learn new tools. How human skills keep showing up as the essential counterpart to AI. How learning designers decide when (and when not) to use AI.

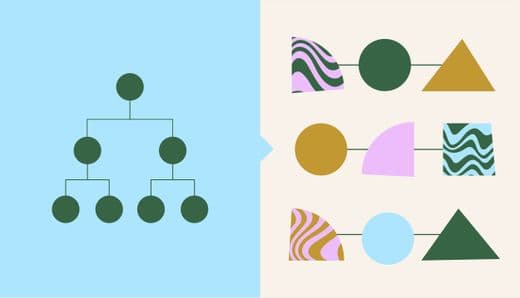

I noticed one simple dynamic: When companies focused exclusively on tools, they got stuck. When they focused on people, things began to move in a positive direction.

And yet, the investment boom suggests we’re still getting this balance wrong. In 2025, companies put an estimated $62 billion into AI. But as McKinsey reported in June, more than 80% of companies saw no material contribution to earnings from these investments. Deloitte’s CTO cited an even starker split this week: Companies are directing 93% of their AI spending to technology and only 7% to people.

Between the lopsided investment and lackluster ROI, this is the AI story of the year and the backdrop for my top three learnings.

Lesson 1: People learn AI best when they’re learning something else.

A massive global consumer-packaged goods (CPG) client gave us an instructive example this year.

We embedded their proprietary AI assistant into a cohort-based academy focused on negotiation skills. The intent was simple: Use the tool as one practice exercise among many. But something unexpected happened.

More than 500 leaders not only practiced negotiation, they also learned an entirely new way to use their company’s AI tool. The discussion boards lit up with people trading prompts, examples, and insights. One learner admitted they had never touched the tool before but were now using it weekly.

The lesson: AI adoption increases when it’s embedded in real work, not when it’s introduced as a standalone competency. People don’t learn AI in the abstract; they learn it when it helps them accomplish something they already care about.

I’ve also found that companies benefit from starting with one intuitive “gateway” tool, like ChatGPT or a similar assistant, before introducing more specialized ones. It’s the difference between learning to walk confidently and sprinting too early.

My takeaway: Contextual learning experiences — with real workplace application built in — accelerate AI integration far more effectively than AI training delivered in isolation.

Lesson 2: The more AI we use, the more human work becomes the differentiator.

Across industries, AI highlighted rather than diminished the need for human nuance and interpretation.

At a Meeting of the Minds session with retail leaders, I expected anxiety about displacement. Instead, I heard something else entirely: a recognition that AI’s presence actually heightens the value of human work. As one leader put it, “AI will reduce transactional work but increase the need for human leadership, trust, and loyalty.”

AI can automate transactions. It can summarize, sort, schedule, and remind. But it can’t build trust, coach a struggling associate, interpret the emotional context of a customer interaction, or decide which path aligns with the company’s values. That work becomes more important as AI scales.

We saw this with our CPG client, too. Their AI tool supporting negotiation skills only worked because humans trained it with care and nuance — modeling empathy, tone, and negotiation finesse. The tool improved because people shaped it, and people improved because the tool reflected them back with clarity.

Human inputs were the catalyst for better outputs.

My takeaway: The differentiator isn’t the tool. It’s the judgment behind the prompt, the interpretation of the output, and the interpersonal work that surrounds it.

Lesson 3: For L&D, the line between AI slop and AI genius is thinner than you think.

If there’s one thing AI did for learning designers in 2025, it was this: It made it really easy to create content. Templates, scripts, assessments, reflections — all available in seconds. But with that ease came something else. A tidal wave of mediocre content masquerading as learning.

This year, I saw more “AI slop” in the market than I ever imagined possible: modules that looked polished and sounded good but lacked context, nuance, or any real understanding of how people learn. And ironically, the more content AI produced, the more obvious the difference became between experiences that were generated and experiences that were designed.

There’s a razor-thin line between accelerating slop and accelerating great content — and which side you land on depends entirely on how you approach the tool. AI doesn’t determine that line; designers do.

We saw this firsthand with some of our best Guild Academy learning designers. When we empowered them to use GPT to speed up the slowest, most mechanical parts of the process, we amplified their judgment rather than replacing it. Remove the human from the loop and slop is inevitable. But give the human in the loop more leverage, and a whole new world of creative possibility opens up.

The results? High-quality programs developed at 2x the usual speed. Not because AI did the thinking, but because designers had more space to do the thinking only they can do.

My takeaway: As AI makes content cheap and abundant, intentional design becomes the only reliable quality control L&D has left. And it may be the most important capability our field can cultivate.

Looking ahead: 2026 will demand more than AI adoption; it will demand alignment among people.

This year companies invested heavily in AI, but the capability to use it well lagged behind. That ought to be a painful reminder for organizations to close the gap between what they buy and how their people are equipped to use it.

My three biggest learnings this year revolve around doing just that: People learn AI best when it’s embedded in real work. Human judgment is what makes AI valuable. And for L&D, the ease of content creation raises the stakes for quality rather than lowering them.

All of this becomes even more important as we enter the next phase of AI work: the shift from individual experimentation to organizational redesign. A framing I heard recently captures the moment: Do we layer AI onto old processes, or do we rebuild those processes from the ground up? In reality, leaders will have to do both.

But they can’t do it without people who are aligned, confident, and equipped with the shared judgment to navigate AI-enabled systems together. That’s the real work of 2026: closing the gap between tools and teams so that investment and capability finally intersect.

If 2025 showed us the cost of misalignment, 2026 offers the chance to correct course. And that won’t come from investing in more tools. It’ll come from investing in your people to learn AI in the context of their work and giving teams the shared understanding they need to move forward together.